I have never liked how companies advertise cameras using megapixels, mostly because it is quite deceptive: it prompts people to believe that more megapixels automatically means a better camera, which isn’t always the case. The unassuming amateur photographer will assume that 26MP is better than 16MP, and 40MP is better than 26MP. From a purely numeric viewpoint, 40MP does beat 26MP: 40,000,000 pixels outnumber 26,000,000 pixels. It’s hard to dispute raw numbers. But raw numbers don’t tell the full story. There are two numeric criteria to consider when looking at how many pixels an image has: (i) the aggregate number of pixels in the image, and (ii) the image’s linear dimensions.

Before we look at this further, I want to clarify one thing. A sensor contains photosites, which are not the same as pixels. Photosites capture photons of light, and the resulting signals are then processed in various ways to produce an image made of pixels. So a 24MP sensor contains 24 million photosites, and the image produced by a camera with this sensor contains 24 million pixels. A camera has photosites; an image has pixels. Camera manufacturers likely use the term megapixel to keep things simple, besides which megaphotosite sounds more like some kind of prehistoric animal. For simplicity’s sake, we will use photosite when referring to a sensor, and pixel when referring to an image.

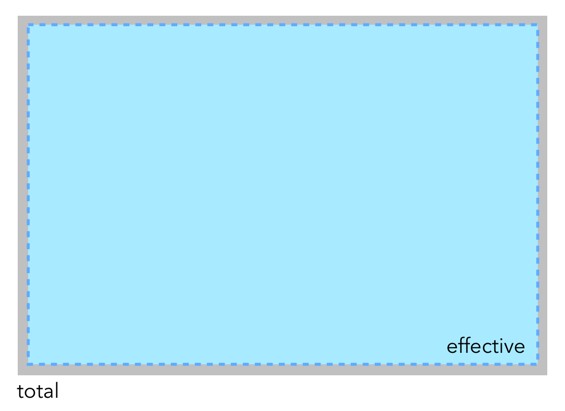

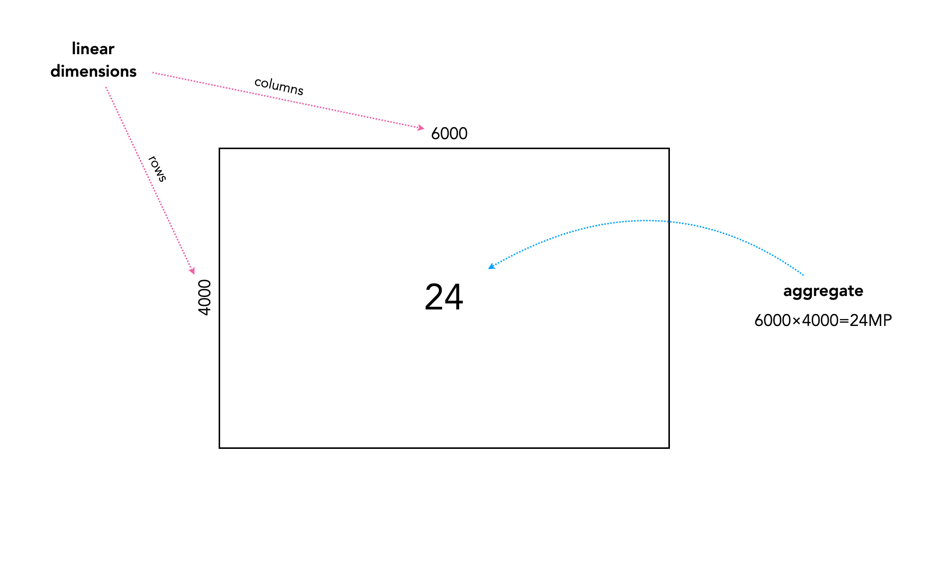

Every sensor is made up of P photosites arranged in a rectangle with r rows and c columns, such that P = r×c. Typically the sensor’s rectangle has an aspect ratio of 3:2 (full frame (FF) and APS-C) or 4:3 (Micro Four Thirds (MFT)). The values r and c are the linear dimensions, which represent the resolution of the image in each dimension: the vertical resolution is r and the horizontal resolution is c. For example, in a 24MP sensor with a 3:2 ratio, r=4000 and c=6000. The image aggregate is the number of megapixels associated with the sensor, so r×c = 24,000,000 = 24MP. This is the number most commonly quoted as the resolution of an image produced by a camera. In practice, the number of photosites on the sensor and the number of pixels in the image are equivalent. Now let’s look at how this affects an image.
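
Given a megapixel count and an aspect ratio, the linear dimensions follow directly from P = r×c. The sketch below assumes an idealized sensor whose column-to-row ratio exactly equals the aspect ratio; the function name `sensor_dimensions` and the 20MP 4:3 example are illustrative only, and real sensors usually land a little off these idealized values.

```python
import math


def sensor_dimensions(megapixels, aspect_w, aspect_h):
    """Estimate (rows, columns) for an idealized sensor.

    Assumes P = r * c and c / r = aspect_w / aspect_h, so
    r = sqrt(P / ratio) and c = r * ratio.
    """
    photosites = megapixels * 1_000_000
    ratio = aspect_w / aspect_h
    rows = math.sqrt(photosites / ratio)
    cols = rows * ratio
    return round(rows), round(cols)


# A 24MP 3:2 sensor and an illustrative 20MP 4:3 sensor
for mp, (w, h) in [(24, (3, 2)), (20, (4, 3))]:
    r, c = sensor_dimensions(mp, w, h)
    print(f"{mp}MP {w}:{h} -> r={r}, c={c}, r*c={r * c:,}")
```

For the 24MP 3:2 case this prints `r=4000, c=6000, r*c=24,000,000`, matching the example above; the 4:3 case only approximates 20,000,000 because the square root does not come out to whole numbers.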

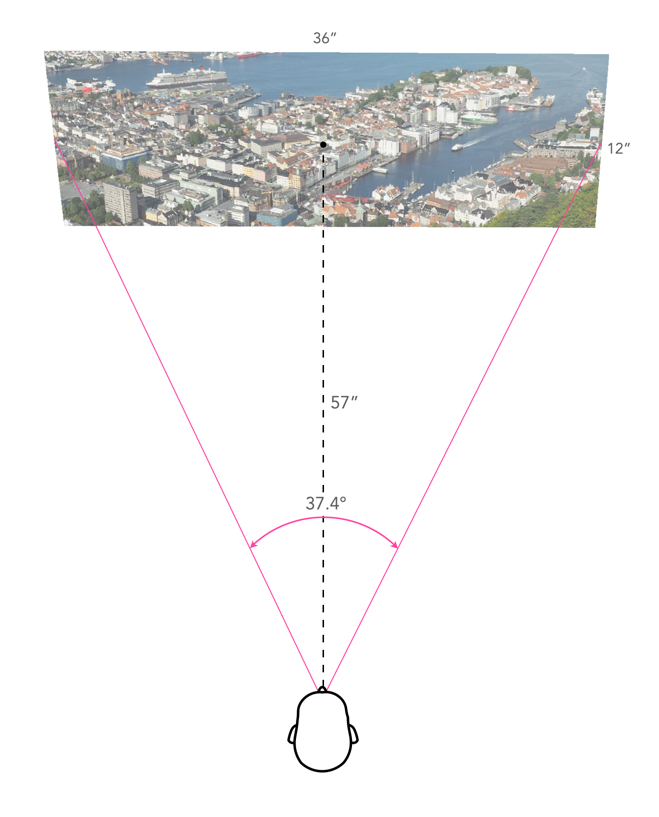

The two numbers offer different perspectives on how many pixels are in an image. For example, going from a 16MP image to a 24MP image is a 1.5 times increase in aggregate pixels. However, because those extra pixels are spread across both dimensions, this only adds up to about a 1.22 times (√1.5) increase in the linear dimensions of the image, i.e. there are only about 22% more pixels in each of the horizontal and vertical dimensions. So while upgrading from 16MP to 24MP does increase the resolution of an image, it adds only a marginal increase from a dimensional perspective. Doubling the linear dimensions of a 16MP image would require a sensor with 64 million photosites, i.e. 2×2 = 4 times as many.
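
To see where the square root comes from, here is a short derivation. It assumes both sensors share the same aspect ratio a = c/r (a and the scale factor k are introduced here for illustration; comparing, say, a 3:2 sensor with a 4:3 one would shift the numbers slightly). Scaling both linear dimensions by k scales the aggregate by k²:

$$
P = r \times c = a\,r^2, \qquad P' = (kr)(kc) = k^2 P \quad\Longrightarrow\quad k = \sqrt{\frac{P'}{P}}
$$

$$
k_{16 \to 24} = \sqrt{\frac{24}{16}} = \sqrt{1.5} \approx 1.22, \qquad k = 2 \;\Longrightarrow\; P' = 2^2 \times 16\,\text{MP} = 64\,\text{MP}
$$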

The best way to understand the effect of upsizing megapixels is to visualize the differences. Figure 3 illustrates various sensor sizes against a 16MP baseline, based on the actual megapixel counts found in current Fuji camera sensors. As you can see, from 16MP it makes sense to upgrade to 40MP, from 26MP to 51MP, and from 40MP to 102MP. In the end, the number of pixels produced by a camera sensor is deceptive insofar as small changes in aggregate pixels do not automatically result in large changes in linear dimensions. More megapixels will always mean more pixels, but not necessarily better pixels.
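
The same comparison can be made numerically. This is a minimal sketch, assuming every sensor shares the same aspect ratio; the list `MEGAPIXELS_IN_TEXT` simply collects the nominal megapixel values mentioned in this post (not exact photosite counts), and `linear_gain` is a hypothetical helper name.

```python
import math

# Nominal megapixel values mentioned in this post (not exact photosite counts)
MEGAPIXELS_IN_TEXT = [16, 24, 26, 40, 51, 102]
BASELINE_MP = 16


def linear_gain(from_mp, to_mp):
    """Per-dimension resolution gain for a change in megapixels,
    assuming both sensors have the same aspect ratio."""
    return math.sqrt(to_mp / from_mp)


print("Relative to a 16MP baseline:")
for mp in MEGAPIXELS_IN_TEXT:
    print(f"{mp:>4}MP  aggregate x{mp / BASELINE_MP:.2f}  "
          f"linear x{linear_gain(BASELINE_MP, mp):.2f}")

print("Upgrade paths:")
for old, new in [(16, 40), (26, 51), (40, 102)]:
    print(f"{old}MP -> {new}MP  aggregate x{new / old:.2f}  "
          f"linear x{linear_gain(old, new):.2f}")
```

Each of the three suggested upgrades roughly doubles or more than doubles the aggregate, yet yields only about a 1.4 to 1.6 times gain in each linear dimension (√2.5 ≈ 1.58, √(51/26) ≈ 1.40, √2.55 ≈ 1.60), whereas 16MP to 24MP or 26MP stays near 1.2 to 1.3.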