One of the tricks of modern digital cameras is a feature called “high-resolution mode” (HRM), sometimes known as pixel-shift. It effectively boosts the resolution of an image even though the number of photosites on the camera’s sensor does not change. For example, it can turn the output of a 24 megapixel sensor into a 96 megapixel image, allowing a camera to produce images at a much higher resolution than its sensor normally could.

So how does this work?

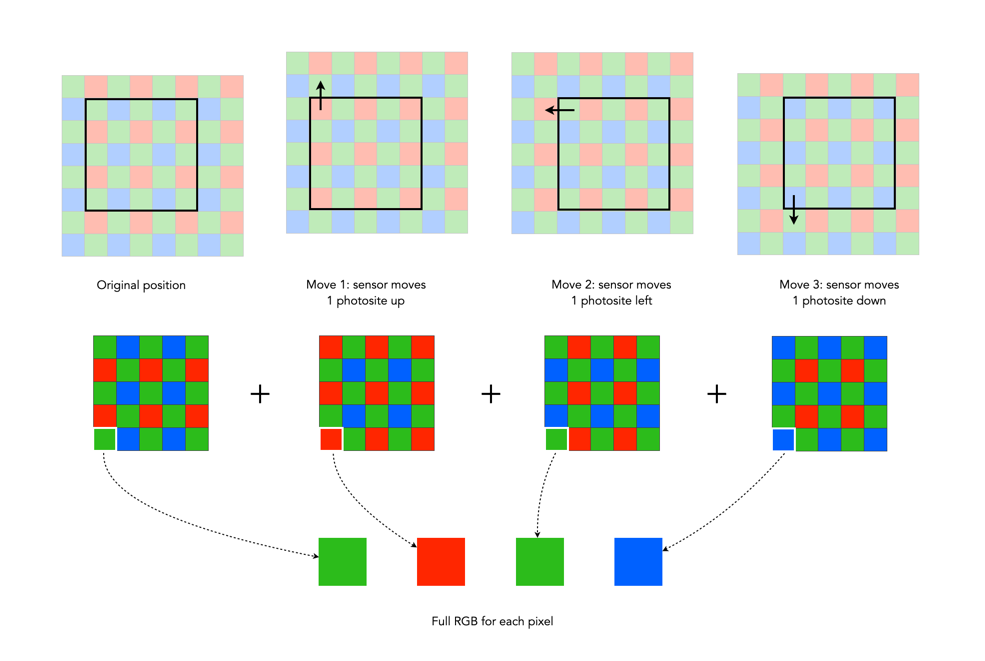

In normal mode, with a colour filter array (CFA) such as the Bayer filter, each photosite records only one colour, and the full colour of each pixel in the final image is reconstructed by demosaicing. HRM instead relies on sensor-shifting (or pixel-shifting): the camera takes a series of exposures, moving the sensor between them, and processes the data from the photosite array into a single image.

1. An exposure is taken with the sensor in its original position. This exposure provides the first of the RGB components for each pixel in the final image.

2. The sensor is moved by one photosite in one of the four principal directions. Each original array location is now covered by a different photosite with a different colour filter. A second exposure is made, providing the second component for each final pixel.

3. Step 2 is repeated two more times, completing a square movement pattern. The result is four pieces of colour data for every array location: one red, one blue, and two green.

4. An image is generated with each RGB pixel derived directly from this data; the green value is obtained by averaging the two green samples.

No interpolation is required, and hence no demosaicing.
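The contrast between the two approaches can be sketched in Python. This is a minimal, idealized model rather than any camera's actual pipeline: it assumes an RGGB Bayer layout, noiseless exposures, a naive demosaic that averages same-colour photosites in each 3×3 neighbourhood, and the square shift pattern described in the steps. The names `SHIFTS`, `bayer_channel`, `demosaic_single_shot` and `pixel_shift_merge` are hypothetical. A scene is a list of rows of `(R, G, B)` tuples, at least 2×2 in size.

```python
# Idealized comparison: single-shot demosaicing vs. 4-shot pixel-shift.
# Assumptions (illustrative only): RGGB layout, noiseless exposures, a naive
# 3x3 neighbourhood-average demosaic, and the square shift pattern below.

SHIFTS = [(0, 0), (0, 1), (1, 1), (1, 0)]  # sensor offsets in photosite units


def bayer_channel(row, col):
    """Channel (0=R, 1=G, 2=B) of the filter at a photosite in an RGGB pattern."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2


def demosaic_single_shot(scene):
    """One exposure, then estimate the two missing channels from neighbours."""
    h, w = len(scene), len(scene[0])
    # Each photosite keeps only the channel its filter passes.
    raw = [[scene[r][c][bayer_channel(r, c)] for c in range(w)] for r in range(h)]
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            sums, counts = [0.0, 0.0, 0.0], [0, 0, 0]
            for rr in range(max(r - 1, 0), min(r + 2, h)):
                for cc in range(max(c - 1, 0), min(c + 2, w)):
                    ch = bayer_channel(rr, cc)
                    sums[ch] += raw[rr][cc]
                    counts[ch] += 1
            pixel = [sums[ch] / counts[ch] for ch in range(3)]
            pixel[bayer_channel(r, c)] = raw[r][c]  # the measured channel is kept
            row.append(tuple(pixel))
        out.append(row)
    return out


def pixel_shift_merge(scene):
    """Four shifted exposures: every location is sampled through R, G, G and B."""
    h, w = len(scene), len(scene[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            samples = {0: [], 1: [], 2: []}
            for dy, dx in SHIFTS:
                # After the shift, a different filter colour covers location (r, c).
                ch = bayer_channel(r + dy, c + dx)
                samples[ch].append(scene[r][c][ch])
            green = sum(samples[1]) / len(samples[1])  # average the two greens
            row.append((samples[0][0], green, samples[2][0]))
        out.append(row)
    return out


# A white/black vertical edge: the "scene" being photographed.
edge = [[(255, 255, 255)] * 2 + [(0, 0, 0)] * 2 for _ in range(4)]

print(demosaic_single_shot(edge)[0][1])  # (127.5, 255, 255.0): cyan-tinted white
print(pixel_shift_merge(edge)[0][1])     # (255, 255.0, 255): true white
```

On the edge, the demosaiced white pixel's red estimate is pulled down by its black neighbour, producing a false cyan tint, while the merged result reproduces the scene exactly. The sketch treats the sensor as larger than the scene, so it ignores the border cropping a real camera would need.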

In cameras with HRM, the shifting is performed by the motors normally dedicated to in-body image stabilization. These motors move the sensor by exactly the amount needed to shift the photosites by one whole unit, in a pattern that ensures one red, one blue and two green photosites are captured for each pixel.
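To get a feel for how precise these movements must be, a back-of-the-envelope calculation converts a shift of one photosite into a physical distance. The example sensor is an assumption, not a specific camera: a 36 mm wide full-frame sensor with 6000 photosites across (a typical 6000 × 4000, 24 megapixel layout). The helper names are hypothetical.

```python
# Back-of-the-envelope: how far the stabilization motors move the sensor.
# Assumed example sensor (hypothetical): 36 mm wide, 6000 photosites across.

SQUARE_PATTERN = [(0, 0), (0, 1), (1, 1), (1, 0)]  # offsets in photosite units


def pixel_pitch_um(sensor_width_mm, photosites_across):
    """Centre-to-centre photosite spacing in micrometres."""
    return sensor_width_mm * 1000 / photosites_across


def offsets_um(pattern, pitch_um):
    """Convert a shift pattern from photosite units to micrometres."""
    return [(dy * pitch_um, dx * pitch_um) for dy, dx in pattern]


pitch = pixel_pitch_um(36, 6000)
print(pitch)                              # 6.0
print(offsets_um(SQUARE_PATTERN, pitch))  # [(0.0, 0.0), (0.0, 6.0), (6.0, 6.0), (6.0, 0.0)]
```

Under these assumptions each move is only about 6 micrometres, which is why the same high-precision stabilization hardware is reused for HRM.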

There are many benefits to this process:

- The total amount of information is quadrupled, and each image pixel uses actual measured values for every colour component from the correct physical location, i.e. full RGB information with no interpolation required.

- Quadrupling the light reaching the sensor (four exposures) should also cut the random noise in half.

- False-colour artifacts, which often arise during demosaicing, are no longer an issue.
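The noise claim rests on a standard statistical result: averaging n independent readings whose noise has standard deviation σ gives a mean with standard deviation σ/√n, so four readings halve it. The simulation below checks this numerically. It is an idealization: it assumes each exposure adds independent Gaussian noise of the same σ to the same underlying value, and the parameters (`signal=100.0`, `sigma=10.0`, the seed) are arbitrary. How much of this gain a real camera delivers for each colour channel depends on how it combines the frames.

```python
import random
import statistics


def noise_after_averaging(n_exposures, trials=20_000, signal=100.0, sigma=10.0, seed=42):
    """Standard deviation of the mean of n noisy readings of the same signal."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(signal, sigma) for _ in range(n_exposures))
        for _ in range(trials)
    ]
    return statistics.pstdev(means)


one = noise_after_averaging(1)
four = noise_after_averaging(4)
print(f"1 exposure: {one:.2f}, 4 exposures: {four:.2f}, ratio: {four / one:.2f}")
```

The printed ratio should come out close to 0.5, matching σ/√4 = σ/2.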

There are also some limitations:

- It requires a very steady scene. Even with the camera on a tripod, it doesn’t work well if, for example, a slight breeze is moving the leaves on a tree.

- Generating an HRM RAW image can be extremely CPU-intensive, which in turn drains the battery. Some systems, like Fujifilm’s GFX100, use off-camera post-processing software to generate the RAW image.

Here are some examples of the high resolution modes offered by camera manufacturers:

- Fujifilm – Cameras like the GFX100 (102MP) have a Pixel Shift Multi Shot mode in which the camera moves the image sensor by 0.5 pixels over 16 images and composes a 400MP image (yes, you read that right).

- Olympus – Cameras like the OM-D E-M5 Mark III (20.4MP) have a High-Resolution Mode that takes 8 shots using 1 and 0.5 pixel shifts, which are merged into a 50MP image.

- Panasonic – Cameras like the S1 (24.2MP) have a High-Resolution mode that results in 96MP images. The Panasonic S1R at 47.3MP produces 187MP images.

- Pentax – Cameras like the K-1 II (36.4MP) use a Pixel Shift Resolution System II with a Dynamic Pixel Shift Resolution mode (for handheld shooting).

- Sony – Cameras like the A7R IV (61MP) use a Pixel Shift Multi Shooting mode to produce a 240MP image.
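Several of these outputs are roughly four times the sensor resolution (for instance 24.2MP × 4 ≈ 96.8MP, close to the S1’s 96MP). One way to see where a factor of four can come from is an idealized geometric model: shifting by half a photosite in each direction doubles the sampling density along each axis, and repeating the whole-photosite moves at each half-photosite position gives full colour at every new sample point. The 16-position pattern below is a hypothetical construction (4 half-photosite offsets × 4 whole-photosite moves), not any manufacturer’s documented sequence; real cameras use different shot counts and merging, and Olympus’s 50MP output shows the factor is not always four. The photosites also stay the same size, so real detail gains are smaller than the sample count suggests.

```python
# Idealized geometry of a hypothetical 16-shot pixel-shift pattern.
# Assumptions: RGGB Bayer sensor; four half-photosite offsets, each combined
# with the four whole-photosite moves used for full colour.
from fractions import Fraction

HALF = Fraction(1, 2)
SUB_PIXEL = [(0, 0), (0, HALF), (HALF, HALF), (HALF, 0)]
WHOLE_PIXEL = [(0, 0), (0, 1), (1, 1), (1, 0)]
PATTERN = [(sy + wy, sx + wx) for sy, sx in SUB_PIXEL for wy, wx in WHOLE_PIXEL]


def bayer_channel(row, col):
    """Channel (0=R, 1=G, 2=B) of the filter at a photosite in an RGGB pattern."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2


def channels_at(y, x):
    """Sorted channels recorded at scene point (y, x) across all 16 shots."""
    seen = []
    for oy, ox in PATTERN:
        # With the sensor offset by (oy, ox), photosite (i, j) is centred at
        # (i + oy, j + ox); it samples (y, x) only if i and j are integers.
        i, j = Fraction(y) - oy, Fraction(x) - ox
        if i.denominator == 1 and j.denominator == 1:
            seen.append(bayer_channel(int(i), int(j)))
    return sorted(seen)


# Distinct sample positions within one photosite's area (offsets modulo 1).
positions = {(oy % 1, ox % 1) for oy, ox in PATTERN}
print(len(PATTERN), "shots,", len(positions), "sample positions per photosite")
print(channels_at(HALF, 0))  # [0, 1, 1, 2]: one red, two green, one blue
```

Every point on the half-photosite grid receives one red, two green and one blue sample, so in this model the output has four full-colour samples for each physical photosite.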
