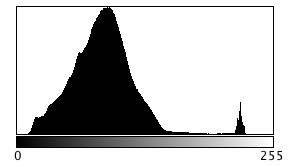

The term ISO, named after the International Organization for Standardization, which publishes the standard, is used to describe light sensitivity. In the world of film, ISO relates to film sensitivity: film with a high ISO is made with larger silver halide crystals, which capture more light. The trade-off is that larger crystals are more visible, so as ISO increases they show up as film grain. In the digital realm, photosites cannot increase in size, so in low light they record less information. To compensate for the lack of information, the signal is amplified, thereby mimicking film sensitivity.

A low ISO setting (e.g. 100) mimics a low-sensitivity film, so a longer exposure time or a larger aperture is required. Conversely, a high ISO setting (e.g. 1600) mimics a high-sensitivity film, and so allows a shorter exposure time (faster shutter speed) or a smaller aperture. Increasing the ISO setting effectively increases the brightness of the resulting image. Note that changing the ISO does not change the sensitivity of the photosites; they are not affected in any way. This is different from film cameras, where the ISO is a property of the film itself. In a digital camera, the ISO has everything to do with what happens to the signal after the photosite has captured the light and converted it to an electrical signal. The ISO setting determines how much that electrical signal is amplified as it passes through an analog amplifier (this amplification is known as the gain).
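To make the trade-off concrete, here is a minimal Python sketch of the arithmetic. It assumes that gain scales linearly with the ISO number and that ISO 100 is the camera's base setting; both are simplifications, and the function names are made up for illustration.

```python
import math

def stops_between(iso_from, iso_to):
    """Number of exposure stops gained when going from iso_from to iso_to."""
    return math.log2(iso_to / iso_from)

def relative_gain(iso, base_iso=100):
    """Amplification relative to the base ISO (assumed linear in ISO)."""
    return iso / base_iso

def equivalent_exposure_time(time_s, iso_from, iso_to):
    """Exposure time giving the same brightness at a new ISO, aperture unchanged."""
    return time_s * iso_from / iso_to

print(stops_between(100, 1600))   # 4.0
print(relative_gain(1600))        # 16.0

t = equivalent_exposure_time(1 / 30, 100, 1600)
print(f"1/{round(1 / t)} s")      # 1/480 s
```

In other words, under these assumptions, going from ISO 100 to ISO 1600 buys four stops: a shot that needed 1/30 s can be taken at roughly 1/480 s with the same aperture.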

A brightly lit scene will produce a strong electrical signal, which requires less amplification (a lower ISO setting) and results in a smoother image with less “grain”. Conversely, less light in a scene means the photosites capture less information and generate weaker electrical signals, which have to be amplified (using a higher ISO setting). Unfortunately, photosites also capture noise, and the amplifier cannot tell the two apart: increasing the ISO amplifies the noise along with the signal. This is why photographs taken with a high ISO often have a grainy appearance (attributable to noise). As a rule, the lower the ISO, the cleaner the image, provided there is enough light for a proper exposure.
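The effect of amplification on noise can be sketched with a toy model in Python. This is a deliberately simplified illustration, not how any particular camera works: it assumes the noise in the captured signal is photon shot noise (the square root of the photon count) plus a fixed read noise, and that the amplifier multiplies signal and noise by the same gain. The function name and the numbers are hypothetical.

```python
import math

def amplified_output(photons, gain, read_noise=3.0):
    """Return (signal, noise, snr) after gain, using a simplified noise model.

    Assumptions: shot noise = sqrt(photons); read noise is a fixed value
    added before the amplifier; gain scales signal and noise equally.
    """
    shot_noise = math.sqrt(photons)
    noise_in = math.sqrt(shot_noise ** 2 + read_noise ** 2)
    signal_out = gain * photons
    noise_out = gain * noise_in
    return signal_out, noise_out, signal_out / noise_out

# Bright scene at low gain versus a scene with 1/16 of the light,
# amplified 16x so both end up with the same output brightness.
bright = amplified_output(photons=10_000, gain=1)
dim = amplified_output(photons=625, gain=16)

for name, (signal, noise, snr) in (("bright", bright), ("dim", dim)):
    print(f"{name}: signal={signal}, noise={noise:.1f}, SNR={snr:.1f}")
```

Both cases reach the same output signal, but the dim, heavily amplified one carries far more noise. In this model the gain itself does not change the signal-to-noise ratio; the high-ISO image looks noisier because it started with less light and the noise was amplified along with it.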