We have talked briefly about how digital camera sensors work from the perspective of photosites and digital ISO, but what happens after the light photons are absorbed by the photosites on the sensor? How are image pixels created? This series of posts will try to demystify some of the inner workings of a digital camera, in a way that is understandable.

A camera sensor is typically made up of millions of cavities called photosites (not pixels; they are not pixels until they are transformed from analog to digital values). A 24MP sensor has 24 million photosites, typically arranged in the form of a matrix, 6000 photosites wide by 4000 photosites high. Each photosite has a single photodiode which records a luminance value. Light photons enter the lens and pass through the lens aperture, and a portion of that light is allowed through to the camera sensor when the shutter is activated at the start of the exposure. Once the photons hit the sensor surface they pass through a micro-lens attached to the receiving surface of each photosite, which helps direct the photons into the photosite, and then through a colour filter (e.g. Bayer), which is used to help determine the colour of each pixel in the image. A red filter allows red light to be captured, a green filter allows green light to be captured, and a blue filter allows blue light in.
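To make the layout concrete, here is a minimal sketch of how a colour filter array assigns one colour to each photosite. It assumes the common RGGB Bayer arrangement (the text above only says "e.g. Bayer", so the exact ordering is an assumption), and the function name `bayer_colour` is made up for illustration.

```python
# Sketch only: assumes an RGGB Bayer layout, which is one common arrangement.
WIDTH, HEIGHT = 6000, 4000          # photosites across and down (24MP example)
TOTAL_PHOTOSITES = WIDTH * HEIGHT   # 24,000,000


def bayer_colour(row, col):
    """Return the filter colour ("R", "G" or "B") over the photosite at (row, col)."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


# Show the top-left 4x4 corner of the filter array.
corner = [" ".join(bayer_colour(r, c) for c in range(4)) for r in range(4)]
for line in corner:
    print(line)

# In this layout every 2x2 block holds one red, two green and one blue filter.
block = [bayer_colour(r, c) for r in range(2) for c in range(2)]
print(block.count("R"), block.count("G"), block.count("B"))
```

Each photosite therefore records the brightness of only one colour; the other two colours at that position have to be estimated later in the pipeline.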

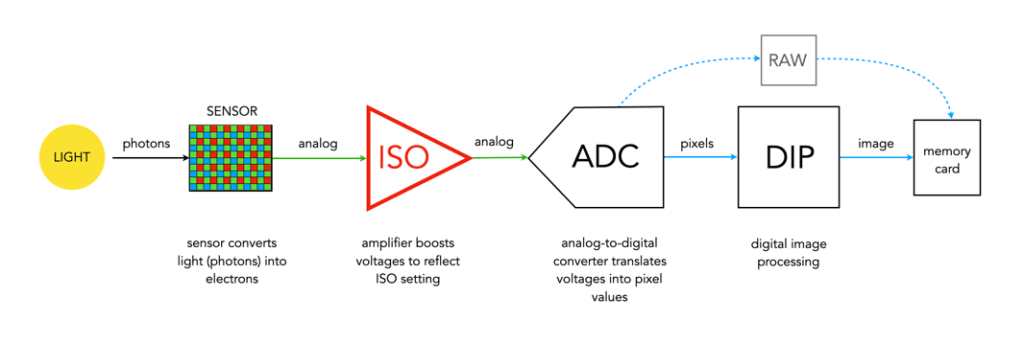

Every photosite can hold only a limited amount of charge (its capacity is sometimes called the well depth). During the exposure the photodiode gathers the photons, converting them into an electrical charge, i.e. electrons, and when the exposure is complete the shutter closes. The strength of the electrical signal is based on how many photons were captured by the photosite. This signal then passes through the ISO amplifier, which makes adjustments to the signal based on ISO settings. The amplifier uses a conversion factor, “M” (Multiplier), to multiply the tally of electrons based on the ISO setting of the camera. For higher ISO, M will be higher, so fewer electrons are needed to reach the same output level.
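The relationship between captured photons, electrons, and the multiplier M can be sketched with a toy model. The well depth of 50,000 electrons, the 50% conversion efficiency, and the values of M used here are illustrative assumptions, not figures for any real sensor, and `photosite_signal` is a hypothetical helper.

```python
def photosite_signal(photons, iso_multiplier, well_depth=50_000, efficiency=0.5):
    """Toy model: photons -> electrons (capped at the well depth) -> amplified signal.

    well_depth and efficiency are assumed values chosen for illustration only.
    """
    electrons = min(int(photons * efficiency), well_depth)
    return electrons * iso_multiplier


# Plenty of light with a low multiplier (M = 1).
bright_low_iso = photosite_signal(20_000, iso_multiplier=1.0)

# A quarter of the light with a higher multiplier (M = 4) gives the same signal.
dim_high_iso = photosite_signal(5_000, iso_multiplier=4.0)

# Too much light: the photosite saturates at its well depth.
saturated = photosite_signal(200_000, iso_multiplier=1.0)

print(bright_low_iso, dim_high_iso, saturated)
```

The second call shows why a higher M needs fewer electrons: 2,500 electrons multiplied by 4 produce the same signal as 10,000 electrons multiplied by 1.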

The analog signal then passes on to the ADC, which is a chip that performs the role of analog-to-digital converter. The ADC takes the analog signal from each photosite as input and classifies it into one of a fixed number of discrete brightness levels, measured in analog-to-digital units (ADU). The result is basically a matrix of pixels. The darker regions of a photographed scene will correspond to a low count of electrons, and consequently a low ADU value, while brighter regions correspond to higher ADU values. At this point the image can follow one (or both) of two paths. If the camera is set to RAW, then information about the image, e.g. camera settings (the metadata), is added and the image is saved in RAW format to the memory card. If the setting is RAW+JPEG, or JPEG, then some further processing may be performed by way of the DIP system.
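As a rough illustration of what the ADC does, the sketch below maps an analog signal onto a fixed number of integer levels. The 12-bit depth and the full-scale value of 50,000 are assumptions made for the example, and `adc_convert` is a hypothetical name, not a real camera API.

```python
def adc_convert(signal, full_scale, bit_depth=12):
    """Quantise an analog signal into an integer ADU value.

    A 12-bit converter (assumed here) has 2**12 = 4096 levels, numbered 0..4095.
    Signals outside the range 0..full_scale are clipped.
    """
    max_adu = 2 ** bit_depth - 1
    fraction = max(0.0, min(signal / full_scale, 1.0))
    return round(fraction * max_adu)


FULL_SCALE = 50_000  # assumed signal level that maps to the brightest ADU value

shadow = adc_convert(1_000, FULL_SCALE)      # dark region: low ADU
highlight = adc_convert(45_000, FULL_SCALE)  # bright region: high ADU
clipped = adc_convert(80_000, FULL_SCALE)    # beyond full scale: clipped

print(shadow, highlight, clipped)
```

Whatever the exact numbers, the important property is the ordering: fewer electrons give a lower ADU value, more electrons give a higher one, and anything past full scale is clipped to the maximum.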

The “pixels” then pass to the DIP system, short for Digital Image Processing. Here demosaicing is applied, which basically converts the pixels in the matrix into an RGB image. Other image processing techniques can also be applied based on particular camera settings, e.g. image sharpening, noise reduction, etc. The output is basically an image. The colour space specified in the camera is applied, before the image and its associated metadata are converted to JPEG format and saved on the memory card.
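Demosaicing can be sketched in a very simplified form. The example below assumes an RGGB filter layout and collapses each 2x2 block of values into a single RGB pixel, which halves the resolution. Real cameras instead interpolate the missing colours at every photosite, so this is a stand-in for the idea rather than the method any camera uses; `demosaic_superpixel` is a hypothetical name.

```python
def demosaic_superpixel(mosaic):
    """Collapse each 2x2 RGGB block of a mosaic into one (R, G, B) pixel.

    Simplified stand-in for demosaicing: the two green values are averaged.
    """
    rgb = []
    for r in range(0, len(mosaic) - 1, 2):
        row = []
        for c in range(0, len(mosaic[r]) - 1, 2):
            red = mosaic[r][c]
            green = (mosaic[r][c + 1] + mosaic[r + 1][c]) // 2
            blue = mosaic[r + 1][c + 1]
            row.append((red, green, blue))
        rgb.append(row)
    return rgb


# A tiny 2x4 mosaic of made-up brightness values, laid out as:
#   R G R G
#   G B G B
mosaic = [
    [200, 100, 40, 60],
    [120, 30, 80, 20],
]

image = demosaic_superpixel(mosaic)
print(image)  # [[(200, 110, 30), (40, 70, 20)]]
```

Each output pixel now carries all three colour components, which is what the later steps (sharpening, noise reduction, colour space, JPEG encoding) operate on.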

Summary: The photons absorbed by a photosite during the exposure time create a number of electrons, which form a charge that is converted by a capacitor to a voltage. That voltage is then amplified and digitized, resulting in a digital grayscale value. Three layers of these grayscale values form the Red, Green, and Blue components of a colour image.
